Intro to SGX: from HTTP to enclaves

June 21, 2018

I recently submitted a design for the SGX infrastructure we are building at R3, and another design for the integration of SGX into Corda. Although the primary use case is Corda, we are trying to build an agnostic SGX-in-the-cloud infrastructure. In this blog post, I’d like to give an introduction to the underlying tech.

Why should I care?

In one sentence: SGX provides a way to offload sensitive data processing to remote untrusted machines.

Trust is implied

It’s not simple to use, it’s not yet hardened by years of constant hammering from users, there are a ton of things we haven’t figured out yet, but the tech itself makes sense and I think it may revolutionize the way we think about privacy and security in the future.

It does this by providing a way to check what code is running remotely. To quote from this great article:

“Instead of directing resources to the elimination of trust, we should direct our resources to the creation of trust”

Well, SGX allows us to do just that.

Is this like ZKP/homomorphic encryption?

Yes, but no. It’s not new crypto magic, although a lot of crypto is involved. It hinges on the hardness of breaking the hardware rather than the hardness of breaking a math problem. In terms of features SGX is much more powerful than ZKP/homomorphic encryption as it allows running (almost) arbitrary computations, including multiparty ones.

I don’t believe you

Good. Let me try to convince you.

The heart of SGX is a process called attestation, which is the establishment of trust in a remotely executing program. To understand how this is done and how it is different to existing technologies it’s instructive to look at HTTP and HTTPS first.

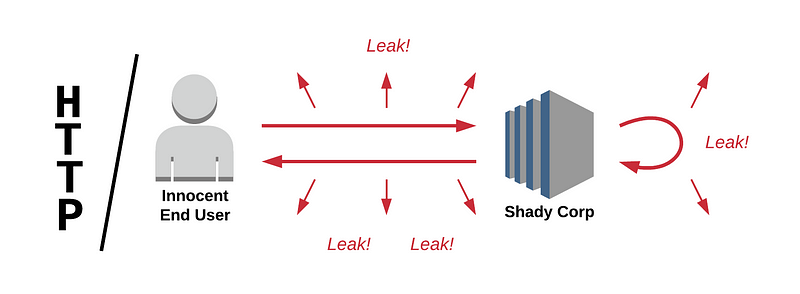

When you open an HTTP webpage, say http://example-unsafe.com, your browser establishes a TCP connection to the remote server and sends a plain text HTTP request over it. The server then sends a plain text reply. Any intermediate node that the packets pass through can intercept, read or modify them. This is called a man-in-the-middle attack, and it allows an attacker to impersonate websites and snoop on traffic.

Furthermore there is no control over how the data is used by the server. This means that as soon as the data leaves the end user’s device it’s completely visible and modifiable all the way through. Not good.

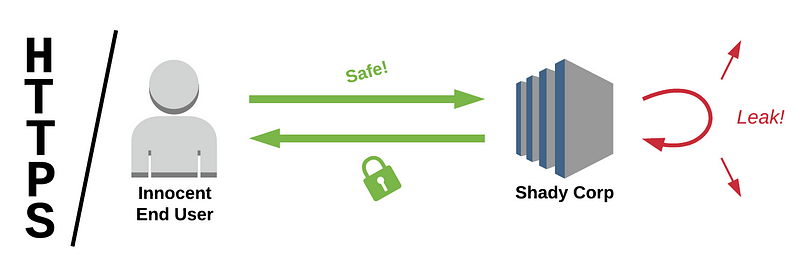

In order to fix this we have a safer version of HTTP called HTTPS, which inserts a TLS layer between the TCP and HTTP layers.

When you open an HTTPS webpage, say https://example-less-unsafe.com/, your browser establishes a TCP connection to the remote server and uses it to start the TLS protocol. During the handshake the remote side sends your browser a certificate chain, which the browser proceeds to verify. First it checks that the root public key in the chain is in the browser’s list of trusted roots. Then it checks the chain itself, which is a sequence of signatures, each key signing the next, all the way down to the server’s key.

This process establishes trust in the server key that can now be used to create a shared secret between the client and the server through a process called key exchange. This fresh key in turn can be used to encrypt the actual HTTP traffic flowing between your browser and the server.
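To make the key exchange step a little more concrete, here is a minimal sketch of a Diffie-Hellman style exchange in Python. It is a toy: the prime `P`, the base `G` and the helper names are assumptions chosen for illustration, the parameters are far too small for real use, and the step where the server signs its half of the exchange with the certified key is left out.

```python
import hashlib
import secrets

# Toy parameters for illustration only: a 127-bit Mersenne prime and a small base.
# Real TLS uses standardized groups or elliptic curves with much larger security margins.
P = 2**127 - 1
G = 3

def make_keypair():
    """Return (private, public) for one side of the exchange."""
    private = secrets.randbelow(P - 3) + 2  # uniform in [2, P - 2]
    public = pow(G, private, P)
    return private, public

def shared_key(my_private, their_public):
    """Derive a 32-byte session key from the shared Diffie-Hellman value."""
    shared = pow(their_public, my_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()

# Each side generates a fresh key pair and sends only the public half.
client_priv, client_pub = make_keypair()
server_priv, server_pub = make_keypair()

# Both sides arrive at the same session key without ever transmitting it.
client_key = shared_key(client_priv, server_pub)
server_key = shared_key(server_priv, client_pub)
```

An eavesdropper sees only `client_pub` and `server_pub`; without one of the private values, recovering the session key is believed to be hard for properly sized parameters.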

If none of the involved keys are compromised, this protocol can prevent man-in-the-middle attacks. The certificate check ensures that the remote side is in fact who they claim to be (identity is established when the remote side registers their key with a Certificate Authority), and the encryption ensures attackers cannot read or tamper with the traffic.

Note however that this process only establishes trust in and secures the connection itself. All the cryptography becomes moot if the entity running https://example-less-unsafe.com decides to reveal the traffic to other parties.

The issue is that the encrypted traffic must be decrypted within the server process, and the decrypted data is then exposed to all programs running on the server machine. This is completely beyond the control of the client.

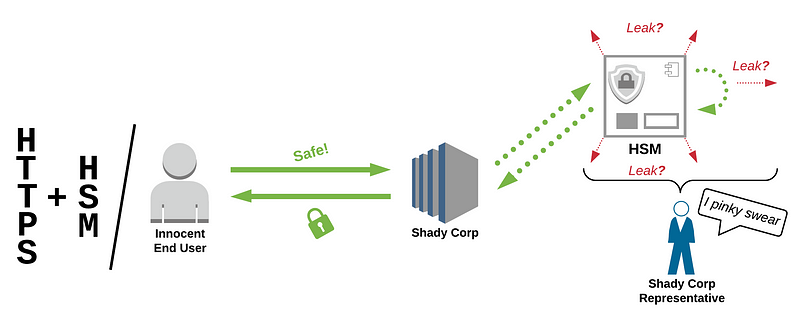

One way to solve this is to put the server side traffic processing into a Hardware Security Module. Such a device offers physical protection against tampering with the data flowing through. This means an HSM can be used to do the cryptography, and perhaps even the processing of sensitive data, as some HSMs allow execution of specially developed software. However, even if we did all this, clients must trust that the website does indeed use these HSMs; there’s no way to verify this claim.

Almost there

This is where SGX and attestation come into the picture. SGX is basically a mini HSM sitting in modern Intel CPUs, but it goes one step further. What if during the establishment of trust clients could also verify what code is operating on the other side? What would we need to do this?

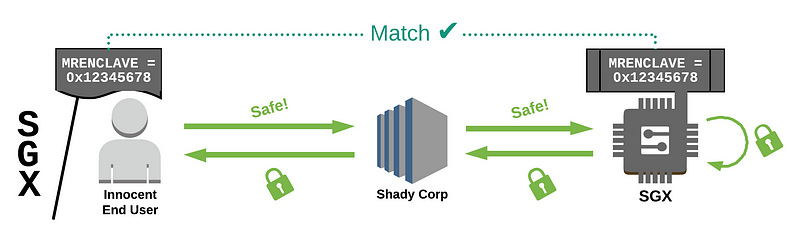

First of all we need a way to identify the code that’s running remotely. For this we can simply hash the server’s executable image. In SGX parlance the executable is called the enclave and the hash is called the measurement or MRENCLAVE.
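As a rough illustration of "identify the code by its hash", the sketch below hashes a byte string standing in for the enclave image. The `measure` function and the sample bytes are made up for this example. The real MRENCLAVE is a SHA-256 digest built up while the enclave's pages are loaded, so it also covers layout and page properties rather than just a flat file.

```python
import hashlib

def measure(image: bytes) -> str:
    """Return a hex SHA-256 digest standing in for the enclave measurement."""
    return hashlib.sha256(image).hexdigest()

# Hypothetical enclave image; any byte string works for the illustration.
original = b"enclave code and data"
tampered = original.replace(b"code", b"c0de")

same_image_matches = measure(original) == measure(original)
tampered_matches = measure(original) == measure(tampered)
```

The same bytes always produce the same measurement, while changing even a single byte produces a different one, which is what lets a client pin down exactly which code it is talking to.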

Once we have identified the code, we need to prove that the remote side is in fact executing it, and not something else.

In order to do this, SGX CPUs use a burnt-in key that’s unique to that specific chip to sign a so-called report that the executing enclave produces. The report includes the enclave’s measurement, arbitrary data that the enclave provides (usually a public key, or a hash of one), as well as other data like the microcode version.

How can we verify that such a signature over a report comes from a genuine Intel chip? We can’t. But Intel can, and this is what their Intel Attestation Service is for. They have a REST API to send such signed reports to, and if the report is valid and signed by a genuine Intel CPU then the IAS will reply with an OK, signed with Intel’s root key.

So: the binary produces a report, the CPU signs it, and Intel signs the result again if the CPU key is genuine.

Note: this is a simplification. The real protocol is more complex and includes an additional “EPID provisioning” step, and the CPU key isn’t used directly.
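The simplified chain of report, CPU signature and Intel signature can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the signatures are textbook RSA over tiny keys built from Mersenne primes, the report is a JSON blob rather than the real binary structure, and `attestation_service` is a local function standing in for the IAS rather than its actual REST API.

```python
import hashlib
import json

E = 65537  # public exponent shared by both toy keys

def make_key(p, q):
    """Build a textbook RSA key from two primes; returns (n, d). Needs Python 3.8+."""
    n = p * q
    d = pow(E, -1, (p - 1) * (q - 1))
    return n, d

def digest(message: bytes, n: int) -> int:
    """Hash the message and reduce it into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(message: bytes, n: int, d: int) -> int:
    return pow(digest(message, n), d, n)

def verify(message: bytes, signature: int, n: int) -> bool:
    return pow(signature, E, n) == digest(message, n)

# Hypothetical keys; far too small for real use.
CPU_N, CPU_D = make_key(2**61 - 1, 2**89 - 1)        # stands in for the chip's key
INTEL_N, INTEL_D = make_key(2**107 - 1, 2**127 - 1)  # stands in for Intel's key

def enclave_report(measurement: str, report_data: str) -> bytes:
    """Serialize the fields the enclave wants attested (made-up format)."""
    fields = {"measurement": measurement, "report_data": report_data}
    return json.dumps(fields, sort_keys=True).encode()

def attestation_service(report: bytes, cpu_signature: int):
    """Stand-in for the IAS: check the CPU signature, then countersign."""
    if not verify(report, cpu_signature, CPU_N):
        return None
    verdict = b"OK:" + report
    return verdict, sign(verdict, INTEL_N, INTEL_D)

# Enclave produces a report, the CPU signs it, the service countersigns it.
report = enclave_report("abc123", "hash-of-enclave-public-key")
cpu_sig = sign(report, CPU_N, CPU_D)
verdict, intel_sig = attestation_service(report, cpu_sig)

# The client only needs Intel's public key (INTEL_N, E) to check the verdict.
client_accepts = verify(verdict, intel_sig, INTEL_N)

# A report with a bad CPU signature never gets countersigned.
forged = attestation_service(report, cpu_sig + 1)
```

The point of the shape is that the client never has to know any individual CPU's key: it trusts one public key, and the service vouches for the chip.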

So, with such a signed report an SGX client can verify that a report is coming from a specific executable. Great!

The loop is closed

However, this is still not sufficient. Even if the client knows what hash is running on the other side, how can they know that the executable isn’t doing anything funny?

In order to establish trust in the measurement we need a deterministic mapping from the server’s source code to the measurement. The source code must be made available to the client, and the binary’s build must be made completely reproducible, to the bit level. And even then, the vast majority of users will not look at the source code or reproduce the build! We need some kind of auditing process that produces corresponding signatures which users can in turn trust.

The issue is very similar to smart contract code. How many Bitcoin users checked the script that does the spending check? How about Ethereum contracts? This is a point on SGX I haven’t seen discussed anywhere. I’m thinking it’s because the primary use case Intel had in mind for SGX is DRM and similar tech, where the creator of the enclave and the user of the enclave are one and the same entity. “We wrote the enclave and we trust our programmers, so it’s enough to check that the remote enclave’s measurement matches.”

However a lot of planned use cases don’t look like this. Take Signal for instance. They developed an enclave to do contact discovery, and even made it open source. This is great, but how many app users checked the code?

The security story of SGX relies on users or people they trust auditing the code and reproducing the measurement. I have some thoughts on how we could do this in a reasonable (and even fun!) way, perhaps in another post.

Anyway. The full reasoning about trust from the client’s perspective goes like this.

- I got an HTTP response from Intel signed with their key, which includes an enclave report. This means the report actually does come from an enclave running on Intel hardware, and this enclave’s measurement is the same as in the report.

- The report contains a public key that the enclave created. I, or someone I trust, audited the source code resulting in the measurement, so I know that the key pair was in fact created by the enclave and the enclave won’t leak the private key. I also trust that the key cannot be extracted from the enclave by malicious attackers because of the various architectural decisions Intel made.

- Furthermore, during auditing, I made sure that any data decrypted by the enclave will only be used for its intended purpose.

- This gives me relative confidence that I can proceed with the key exchange and encryption: the traffic can only be decrypted by the enclave image, and it won’t be leaked elsewhere, not even to the administrator of the server.
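The client-side reasoning above can be written down as a small decision function. This is only a sketch under stated assumptions: `AttestedReport`, `should_trust` and the field names are hypothetical, the Intel signature check is assumed to have happened elsewhere and is passed in as a boolean, and the report data is assumed to be a SHA-256 hash of the enclave's public key.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class AttestedReport:
    measurement: str          # hex measurement taken from the Intel-signed response
    report_data: str          # hex SHA-256 of the enclave's public key (assumed layout)
    intel_signature_ok: bool  # outcome of verifying Intel's signature elsewhere

def should_trust(report: AttestedReport, enclave_public_key: bytes,
                 audited_measurements: set) -> bool:
    """Decide whether to proceed with the key exchange."""
    # 1. The response must really come from Intel.
    if not report.intel_signature_ok:
        return False
    # 2. The measurement must match code that I, or someone I trust, audited.
    if report.measurement not in audited_measurements:
        return False
    # 3. The key I am about to use must be the one the enclave put in its report.
    return report.report_data == hashlib.sha256(enclave_public_key).hexdigest()

# Example values, all made up for illustration.
key = b"enclave-public-key-bytes"
audited = {"abc123"}
good = AttestedReport("abc123", hashlib.sha256(key).hexdigest(), True)
unaudited = AttestedReport("ffffff", hashlib.sha256(key).hexdigest(), True)

proceed = should_trust(good, key, audited)
reject_unaudited = should_trust(unaudited, key, audited)
reject_swapped_key = should_trust(good, b"attacker-key", audited)
```

Note that the second check is where the auditing and reproducible builds come in: the set of audited measurements has to come from somewhere the client trusts.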

We can also think of this as a modified version of usual certificate chains. The root “Certificate Authority” is Intel, but the signed identities aren’t registered entities like companies, but rather specific pieces of code! How cool is that!

Disclaimer

The above description is a simplified overview of how SGX works; the actual tech is much more involved.

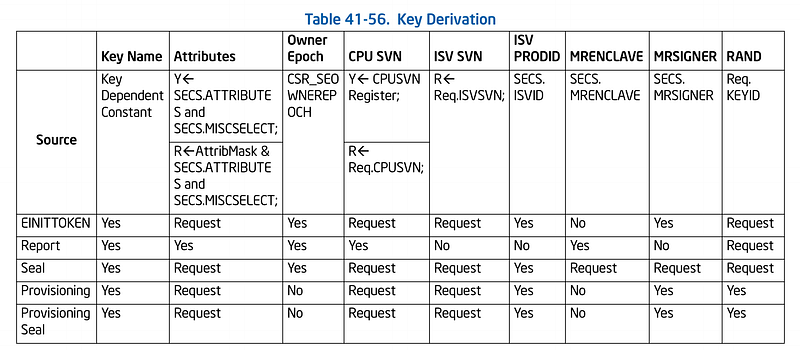

When I first started working with SGX a lot of the details seemed very mysterious. No matter which documentation I started reading, it was either way too high level or way too low level, and sometimes even both. The tech jargon is heavy. What’s the difference between ISVSVN and CPUSVN? What is an Extended Group ID? What does it mean for an enclave to seal to MRSIGNER?

Put simply, the tech is complex. A lot of it is very well documented, the instruction reference being a good example. But some of it is less so. What do the LE, QE, PvE and PCE enclaves do? What’s aesmd for?

It seems that SGX has many different aspects, but it’s hard to find a simple top-down description. Hopefully this post gave some intuition into the possibilities. In the upcoming posts I’ll try to slowly break down the tech, focusing on the software side of things. I would also like to point interested readers to this paper, which is an excellent beefy deep dive into SGX.

Conclusion

SGX opens up the possibility for a user to check precisely what a remote party is doing with their data. It’s still new tech, and there are and will be plenty of issues to overcome, but it opens up a way to give end users unprecedented control.

In the next post we’ll explore how an enclave is loaded (EINIT), how it can access the CPU keys (EGETKEY), and how these relate to Intel-provided enclaves.